Image Data Handling

After I installed OpenCV I wanted to get started with some image manipulations. The concept is pretty simple: Bradski defines Computer Vision as basically “the transformation of data from a still or video camera into either a decision or a new representation”. OpenCV provides a standard procedure for loading image data, so the first thing I did was get comfortable with some of the basic image handling functions.

#include <stdio.h>

#include <stdlib.h>

#include "highgui.h"

int main( int argc, char** argv ) {

IplImage* img = 0;

if( argc < 2 ) {

printf( "Usage: Accepts one image as argument\n" );

exit( EXIT_FAILURE );

}

img = cvLoadImage( argv[1] );

if( !img ) {

printf( "Error loading image file %s\n", argv[1]);

exit( EXIT_FAILURE );

}

cvNamedWindow( "Example1", CV_WINDOW_AUTOSIZE );

cvMoveWindow( "Example1", 100, 100 );

cvShowImage( "Example1", img );

cvWaitKey( 0 );

cvReleaseImage( &img );

cvDestroyWindow( "Example1" );

return EXIT_SUCCESS;

}

Get the code from my Git Repository

If you need help with Git, follow this quick introduction – Getting Started With Git

It’s pretty clear from here why OpenCV is so user friendly: its interface is notably intuitive. My impression of the highgui.h header so far is that it hosts the display functions that put image data on screen, as its name “highgui” suggests.

IplImage* img = 0;

IplImage is the data structure used to store image data. It got its name from its home, Intel, and it stands for Image Processing Library (IPL) Image. Here’s a taste of what it looks like.

typedef struct _IplImage {

int nSize; /* sizeof(IplImage) */

int ID; /* version (=0) */

int nChannels; /* Most of OpenCV functions support

1,2,3 or 4 channels */

int alphaChannel; /* Ignored by OpenCV */

int depth; /* Pixel depth in bits: IPL_DEPTH_8U,

IPL_DEPTH_8S, IPL_DEPTH_16S,

IPL_DEPTH_32S, IPL_DEPTH_32F

and IPL_DEPTH_64F are supported */

char colorModel[4]; /* Ignored by OpenCV */

char channelSeq[4]; /* Ditto */

int dataOrder; /* 0 - interleaved color channels,

1 - separate color channels.

cvCreateImage can only create

interleaved images */

int origin; /* 0 - top-left origin,

1 - bottom-left origin

(Windows bitmaps style) */

int align; /* Alignment of image rows (4 or 8).

OpenCV ignores it and uses

widthStep instead */

int width; /* Image width in pixels */

int height; /* Image height in pixels */

struct _IplROI *roi;/* Image ROI. If NULL, the whole

image is selected */

struct _IplImage *maskROI; /* Must be NULL */

void *imageId; /* " " */

struct _IplTileInfo *tileInfo; /* " " */

int imageSize; /* Image data size in bytes

(==image->height*image->widthStep

in case of interleaved data)*/

char *imageData; /* Pointer to aligned image data */

int widthStep; /* Size of aligned image row in bytes */

int BorderMode[4]; /* Ignored by OpenCV */

int BorderConst[4];/* Ditto*/

char *imageDataOrigin;/* Pointer to very origin of image data

(not necessarily aligned) -

needed for correct deallocation */

} IplImage;
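To make the widthStep and imageSize comments in the struct more concrete, here is a minimal sketch in plain C. It does not use OpenCV: the helper names are hypothetical, and the 4-byte row alignment is an assumption taken from the "4 or 8" note on the align field.

```c
#include <assert.h>
#include <stddef.h>

/* Bytes in one image row after padding up to a multiple of `align`.
 * Mirrors the idea behind widthStep; the alignment value is an assumption. */
int row_stride_bytes(int width, int channels, int bytes_per_channel, int align)
{
    assert(width > 0 && channels > 0 && bytes_per_channel > 0 && align > 0);
    int raw = width * channels * bytes_per_channel;
    return (raw + align - 1) / align * align;
}

/* Total buffer size for interleaved data: height * widthStep,
 * as stated in the imageSize comment of the struct. */
int image_size_bytes(int height, int width_step)
{
    assert(height > 0 && width_step > 0);
    return height * width_step;
}

/* Byte offset of the first pixel of row y inside the image buffer. */
size_t row_offset(int y, int width_step)
{
    assert(y >= 0 && width_step > 0);
    return (size_t)y * (size_t)width_step;
}
```

For a 5 pixel wide, 3-channel, 8-bit image, the raw row is 15 bytes, so with 4-byte alignment the stride becomes 16. That padding is why code stepping from row to row should advance by widthStep rather than by width times nChannels.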

Most of it is pretty intuitive, but I’m sure I’ll learn more about some of its more obscure data members soon. The problem I faced next was: given an image file, how do I fill in all of that data? That’s where the next function came in.

IplImage* cvLoadImage(const char* filename, int iscolor);

filename - name of the file to be loaded

iscolor - Specifies colorness of the loaded image:

If >0, the loaded image is forced to be color 3-channel image;

If 0, the loaded image is forced to be grayscale;

If <0, the loaded image will be loaded as is

with the number of channels depending on the file.

If omitted (C++ only), it defaults to CV_LOAD_IMAGE_COLOR (1).

This is an impressive function. It parses the file named by its argument, interprets all the information, fills in an IplImage structure with the data, and finally returns a pointer to that IplImage. It allocates the appropriate amount of memory and supports the following formats:

- Windows bitmaps - BMP, DIB

- JPEG files - JPEG, JPG, JPE

- Portable Network Graphics - PNG

- Portable image format - PBM, PGM, PPM

- Sun rasters - SR, RAS

- TIFF files - TIFF, TIF

Notice however that I assign it to img. img is a pointer to the IplImage. That data structure is large and bulky, so passing it around by value would be costly in overhead.
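The iscolor rule from the parameter description can be written down as a tiny decision function. This is only a sketch of the documented behavior, not OpenCV's code, and channels_after_load is a hypothetical name.

```c
#include <assert.h>

/* Number of channels the loaded image ends up with, following the
 * iscolor description: >0 forces 3-channel color, 0 forces grayscale,
 * <0 keeps whatever the file contains. */
int channels_after_load(int iscolor, int file_channels)
{
    assert(file_channels >= 1 && file_channels <= 4);
    if (iscolor > 0)
        return 3;            /* forced color */
    if (iscolor == 0)
        return 1;            /* forced grayscale */
    return file_channels;    /* loaded as is */
}
```

So a grayscale file loaded with a positive iscolor still comes back as a 3-channel image, which matters later when an output image has to match the input's channel count.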

Alright, so I've got an image loaded into my program; how do I output it? What I learned next is that you have to create your own windows for data output.

int cvNamedWindow( const char* name, int flags );

name - Name of the window which is used as window identifier

and appears in the window caption.

flags - Flags of the window. Currently the only supported flag

is CV_WINDOW_AUTOSIZE. If it is set, window size is

automatically adjusted to fit the displayed image while

user can not change the window size manually.

The first parameter, the character array, is the name I used to refer to this exact window throughout the rest of the program, and the second is a set of flags that controls how the window is sized. There is something interesting to note here: the window identifier "Example1" is also the caption that appears on the window once it is displayed, and I haven't found any workaround for this yet. I'm not sure if there is a need, but what if you want to make multiple windows with the same caption, yet refer to them independently throughout the program? Please let me know if you find a solution.

Now I noticed when the window appeared it was always in the corner... mathnathan doesn't like this... So a simple little move window function served me great here.

void cvMoveWindow( const char* name, int x, int y );

name - Name of the window to be relocated.

x - New x coordinate of top-left corner

y - New y coordinate of top-left corner

Remember, "Example1" is now the string literal I had to use to refer to that window. The next two parameters are ints. They simply refer to the (x, y) coordinates where the window's upper left corner will be.

I got an image loaded and a window up, so then I needed to put the image in the window. OpenCV provides a function which displays an IplImage* pointer in an existing window, in my case "Example1".

void cvShowImage( const char* name, const CvArr* image );

name - The name of the window.

image - The image to be shown.

Now the image is displayed in the window I created. Hooray. Note, if the window was set with CV_WINDOW_AUTOSIZE flag, then the window will resize to fit the image, otherwise the image will be resized to fit in the window.

To make the window go away I simply waited for any key to be pressed.

int cvWaitKey( int delay=0 );

delay - The delay in milliseconds.

When given a delay of less than or equal to 0, this function simply pauses the program and waits indefinitely for a key to be pressed. Given a positive integer, it waits at most that many milliseconds for a key press. It returns the code of the pressed key, or -1 if the delay elapsed with no key pressed. This is the only function within highgui that fetches and handles events.

Being the good little C programmer that I am, I freed the allocated memory used to hold the image.

void cvReleaseImage( IplImage** image );

image - The IplImage structure you wish to be released.

Be sure to take note that this function expects a pointer to the IplImage* itself. So you should pass the address of your pointer using the address-of operator ' & '.
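Why a pointer to a pointer? A release function that receives the address of the caller's pointer can free the memory and also reset that pointer, so nothing dangling is left behind. The sketch below shows the pattern in plain C with a hypothetical Picture type. It is not OpenCV's implementation, only the same calling convention.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical stand-in for an image: a header plus a pixel buffer. */
typedef struct Picture {
    int width;
    int height;
    unsigned char *data;
} Picture;

/* Allocate a zero-filled single-channel picture; returns NULL on failure. */
Picture *picture_create(int width, int height)
{
    assert(width > 0 && height > 0);
    Picture *p = (Picture *)malloc(sizeof *p);
    if (!p)
        return NULL;
    p->data = (unsigned char *)calloc((size_t)width * (size_t)height, 1);
    if (!p->data) {
        free(p);
        return NULL;
    }
    p->width = width;
    p->height = height;
    return p;
}

/* Free the picture and set the caller's pointer to NULL.
 * Taking Picture** is what makes the reset possible. */
void picture_release(Picture **picture)
{
    if (!picture || !*picture)
        return;              /* nothing to do, safe to call twice */
    free((*picture)->data);
    free(*picture);
    *picture = NULL;
}
```

After picture_release( &p ), p is NULL in the caller, so an accidental second release does nothing instead of freeing the same memory twice.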

Lastly I destroyed the window.

void cvDestroyWindow( const char* name );

name - The name of the window you wish to destroy.

I'm quite fond of this function, they really gave it a great name. Programming suddenly got way more fun!

Compiling

The program is ready to go, but building it wasn't as easy as gcc myprogram.c; it required linking to the OpenCV libraries. This can be a nuisance, but OpenCV makes linking to its libraries a breeze when using CMake. If you're new to CMake and would like a quick introduction, check out my Getting Started with CMake tutorial. When I built OpenCV from source in the previous post, it produced an OpenCVConfig.cmake file (making it easy!) for me to include in my CMakeLists.txt file.

cmake_minimum_required(VERSION 2.6)

project(examples)

include($ENV{OpenCV_DIR}/OpenCVConfig.cmake)

include_directories(${OPENCV_INCLUDE_DIR})

add_executable(example1 example2-1.cpp)

target_link_libraries(example1 ${OpenCV_LIBS})

Plain and simple, that's all there was to it. I saved that as my CMakeLists.txt file, moved into the build directory, ran cmake, then make, and tested my program with some sample pictures. SUCCESS!

Applying a Gaussian Transformation

Using my newfound image handling skills I attempted to smooth an image using cvSmooth(). There are a few minor additions to the previous program.

#include <stdio.h>

#include <stdlib.h>

#include "highgui.h"

#include "cv.h"

int main( int argc, char** argv ) {

IplImage* img = 0;

IplImage* out = 0;

if( argc < 2 ) {

printf( "Usage: Accepts one image as argument\n" );

exit( EXIT_FAILURE );

}

img = cvLoadImage( argv[1] );

if( !img ) {

printf( "Error loading image file %s\n", argv[1]);

exit( EXIT_FAILURE );

}

out = cvCreateImage( cvGetSize(img), IPL_DEPTH_8U, 3 );

cvNamedWindow( "Example1", CV_WINDOW_AUTOSIZE );

cvMoveWindow( "Example1", 100, 100 );

cvNamedWindow( "Output", CV_WINDOW_AUTOSIZE );

cvMoveWindow( "Output", 300, 100 );

cvShowImage( "Example1", img );

cvSmooth( img, out, CV_GAUSSIAN, 3, 3 );

cvShowImage( "Output", out );

cvWaitKey( 0 );

cvReleaseImage( &img );

cvReleaseImage( &out );

cvDestroyWindow( "Example1" );

cvDestroyWindow( "Output" );

return EXIT_SUCCESS;

}

Get the code from my Git Repository

If you need help with Git, follow my quick introduction - Getting Started With Git

First off, I added cv.h into the mix here. This is where I found all of my Computer Vision toys. To be able to see the before and after, I created another IplImage* pointer, which I called out, to store the transformed data. I learned another method for creating an image without using the cvLoadImage() function, which I used here.

IplImage* cvCreateImage( CvSize size, int depth, int channels );

size - The image's width and height

depth - Bit depth of image elements. Can be one of:

IPL_DEPTH_8U - unsigned 8-bit integers

IPL_DEPTH_8S - signed 8-bit integers

IPL_DEPTH_16U - unsigned 16-bit integers

IPL_DEPTH_16S - signed 16-bit integers

IPL_DEPTH_32S - signed 32-bit integers

IPL_DEPTH_32F - single precision floating-point numbers

IPL_DEPTH_64F - double precision floating-point numbers

channels - Number of channels per element, pixel, in the image.

I got the size automatically using cvGetSize.

CvSize cvGetSize( const CvArr* arr );

arr - The array header

The function cvGetSize returns number of rows (CvSize::height) and number of columns (CvSize::width) of the input matrix or image. If I were to have the *roi pointer pointing to a subset of the original image, the size of ROI (Region Of Interest) is returned.

The next parameter is an int giving the bit depth of the image elements. Lastly it wants the number of channels per element (pixel), which can be 1, 2, 3 or 4. The channels are interleaved; for example, the usual data layout of a color image is: b0 g0 r0 b1 g1 r1... Although the IPL image format can in general store non-interleaved images as well, and some OpenCV functions can process them, cvCreateImage() creates interleaved images only.... How sad...
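The b0 g0 r0 b1 g1 r1 layout is easier to see as index arithmetic. Below is a small sketch for 8-bit images, comparing the interleaved layout with a generic planar (non-interleaved) one. Both function names are hypothetical, and the planar formula is just one plausible layout, not necessarily what IPL uses.

```c
#include <assert.h>
#include <stddef.h>

/* Interleaved: the channels of one pixel sit next to each other,
 * so pixel x of row y starts at y*width_step + x*channels. */
size_t interleaved_offset(int x, int y, int c, int width_step, int channels)
{
    assert(x >= 0 && y >= 0 && c >= 0 && c < channels && width_step > 0);
    return (size_t)y * (size_t)width_step + (size_t)x * (size_t)channels
           + (size_t)c;
}

/* Planar (assumed layout): each channel is stored as a full image plane,
 * one plane after another. */
size_t planar_offset(int x, int y, int c, int width_step, int height)
{
    assert(x >= 0 && y >= 0 && y < height && c >= 0 && width_step > 0);
    return (size_t)c * (size_t)height * (size_t)width_step
           + (size_t)y * (size_t)width_step + (size_t)x;
}
```

In the interleaved case the red value of the second pixel in the first row (x = 1, c = 2) lands at offset 5, which matches counting along b0 g0 r0 b1 g1 r1.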

And finally here is the transformation.

void cvSmooth( const CvArr* src,

CvArr* dst,

int smoothtype=CV_GAUSSIAN,

int param1=3,

int param2=0,

double param3=0 );

src - The source image, to be smoothed.

dst - The destination image, where to save the image.

smoothtype - Smoothing algorithm to be employed

CV_BLUR_NO_SCALE (simple blur with no scaling) - summation over a

pixel param1×param2 neighborhood. If the neighborhood size may

vary, one may precompute integral image with cvIntegral function.

CV_BLUR (simple blur) - summation over a pixel param1×param2

neighborhood with subsequent scaling by 1/(param1•param2).

CV_GAUSSIAN (gaussian blur) - convolving image with param1×param2

Gaussian kernel.

CV_MEDIAN (median blur) - finding median of param1×param1

neighborhood (i.e. the neighborhood is square).

CV_BILATERAL (bilateral filter) - applying bilateral 3x3 filtering

with color sigma=param1 and space sigma=param2.

param1 - The first parameter of smoothing operation.

param2 - The second parameter of smoothing operation. In case

of simple scaled/non-scaled and Gaussian blur if param2

is zero, it is set to param1.

param3 - In case of Gaussian kernel this parameter may specify

Gaussian sigma (standard deviation). If it is zero, it is

calculated from the kernel size:

sigma = (n/2 - 1)*0.3 + 0.8

n=param1 for horizontal kernel

n=param2 for vertical kernel.

Using standard sigma for small kernels (3×3 to 7×7) gives

better speed. If param3 is not zero, while param1 and param2

are zeros, the kernel size is calculated from the sigma.
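As a quick check on the sigma formula quoted above, here is a sketch that evaluates it for a given kernel size. The function name is hypothetical, and the formula does not say whether n/2 is an integer or a real division; this sketch assumes a real division.

```c
#include <assert.h>

/* Default Gaussian sigma for an n-wide kernel, using the formula quoted
 * in the parameter description: sigma = (n/2 - 1)*0.3 + 0.8.
 * Assumption: n/2 is computed in floating point. */
double default_gaussian_sigma(int n)
{
    assert(n > 0 && n % 2 == 1);   /* Gaussian kernel sizes are odd */
    return (n / 2.0 - 1.0) * 0.3 + 0.8;
}
```

Under that assumption a 3-wide kernel gives a sigma of about 0.95 and a 7-wide kernel about 1.55, so the call cvSmooth( img, out, CV_GAUSSIAN, 3, 3 ) with param3 left at 0 would apply a fairly gentle blur.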

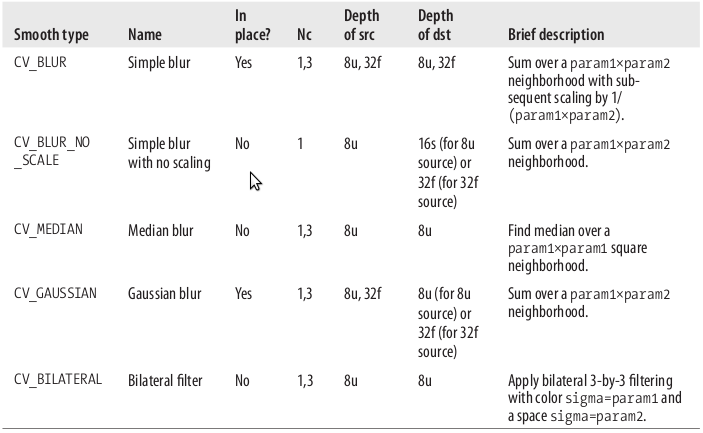

Your source image and destination image are self explanatory. The next parameter is your smoothing algorithm, and the other 3 parameters depend on the smooth type; here is another reference to the various smooth types (Bradski).
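To see what the param1×param2 neighborhood means in practice, here is a sketch of the simple blur described for CV_BLUR and CV_BLUR_NO_SCALE, written in plain C for a single-channel 8-bit image. It is not cvSmooth's implementation: box_filter is a hypothetical name, the image is assumed to have no row padding, and edge pixels are repeated at the border, which is a choice made for this sketch.

```c
#include <assert.h>

/* Clamp v into [lo, hi]; used to repeat edge pixels at the border. */
static int clamp_int(int v, int lo, int hi)
{
    if (v < lo) return lo;
    if (v > hi) return hi;
    return v;
}

/* Box filter over a kw x kh neighborhood (both odd).
 * scaled != 0: divide each sum by kw*kh, as described for CV_BLUR.
 * scaled == 0: keep the raw sum, as described for CV_BLUR_NO_SCALE.
 * dst holds ints because unscaled sums can exceed 255. */
void box_filter(const unsigned char *src, int *dst, int width, int height,
                int kw, int kh, int scaled)
{
    assert(src && dst && width > 0 && height > 0);
    assert(kw > 0 && kh > 0 && kw % 2 == 1 && kh % 2 == 1);

    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            int sum = 0;
            for (int dy = -kh / 2; dy <= kh / 2; ++dy) {
                for (int dx = -kw / 2; dx <= kw / 2; ++dx) {
                    int sy = clamp_int(y + dy, 0, height - 1);
                    int sx = clamp_int(x + dx, 0, width - 1);
                    sum += src[sy * width + sx];
                }
            }
            dst[y * width + x] = scaled ? sum / (kw * kh) : sum;
        }
    }
}
```

A Gaussian blur follows the same loop structure but weights each neighbor by a Gaussian kernel instead of treating all of them equally, which is why it smooths without smearing as much as the box filter.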

I then displayed the output image in the "Output" window and was a good little C programmer and cleaned up after myself.

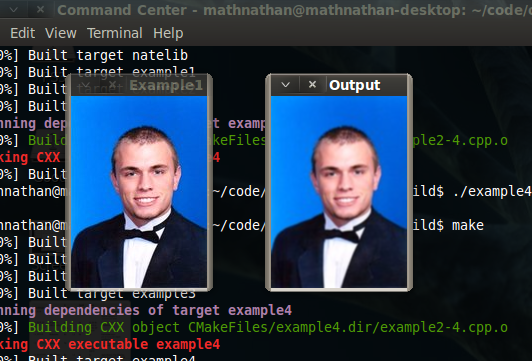

So those are the changes in the new program. It takes an image as input, creates an output image of the same size, creates two windows, displays the original, runs it through a smoothing filter, then displays the result as well! Easy stuff. Here is the output I received after running a picture of myself through the filter. COOL!!

References

I'm studying from Gary Bradski's Learning OpenCV