The depth array that comes in from the Kinect has a precision of up to 11 bits, or 2^11 = 2048 different values. The Kinect is just a sensor, a measurement tool, and every scientist wants to know the limitations of their equipment. So the obvious questions are: what is the accuracy of these measurements, and what do these values represent in physical space?

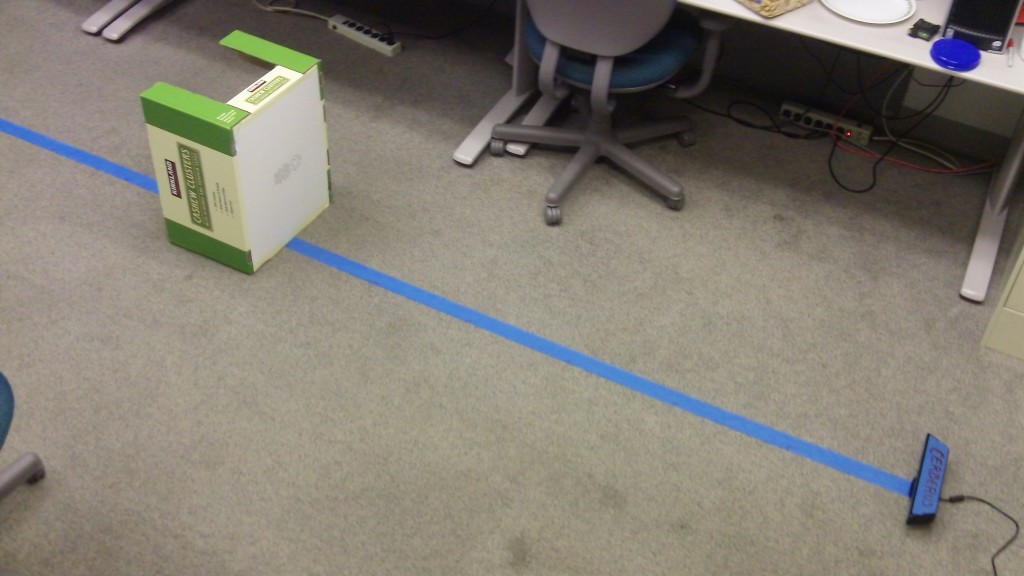

I conducted a very simple experiment to answer these questions. I placed the Kinect on the floor and laid a long piece of tape orthogonal to the Kinect’s field of view. I then marked the tape at one-foot intervals from 1 ft to 10 ft from the sensor. For each mark from 2 ft back to 10 ft, I placed a cardboard box in the center of the Kinect’s field of view (1 ft was within the minimum triangulation distance for PrimeSense’s PS1080-A2 SoC image processor). The experiment looked like this.

For each measurement I saved the RGB image, a color-mapped depth image, and a text file containing the raw values from the depth array for the ROI containing the box. I used the color-mapped depth image as a reference for my measurements, which gave me a little more confidence in their accuracy. The Kinect’s depth measurements within the ROI were all very similar: the maximum deviation from the mean was 2 for all values along the planar surface of the box. I averaged those values to get my measurement for each distance.
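
The averaging step described above can be sketched roughly as follows. This is a minimal illustration, not the code used for the experiment: the `roi_stats` struct, the `roi_summary` function, and the row-major `uint16_t` frame layout are all assumptions made for the example.

```c
#include <stddef.h>
#include <stdint.h>

/* Summary of the raw values inside a region of interest (ROI).
 * Hypothetical helper type, not part of any Kinect driver. */
typedef struct {
    double mean;          /* average raw value over the ROI */
    double max_deviation; /* largest |value - mean| in the ROI */
} roi_stats;

/* depth: row-major frame of raw values, frame_width columns wide.
 * (x0, y0): top-left corner of the ROI; w, h: ROI size in pixels.
 * The caller must keep the ROI inside the frame. */
roi_stats roi_summary(const uint16_t *depth, size_t frame_width,
                      size_t x0, size_t y0, size_t w, size_t h)
{
    roi_stats s = {0.0, 0.0};
    if (depth == NULL || w == 0 || h == 0)
        return s;

    /* First pass: mean of all raw values in the ROI. */
    double sum = 0.0;
    for (size_t y = y0; y < y0 + h; y++)
        for (size_t x = x0; x < x0 + w; x++)
            sum += depth[y * frame_width + x];
    s.mean = sum / (double)(w * h);

    /* Second pass: largest absolute deviation from that mean. */
    for (size_t y = y0; y < y0 + h; y++) {
        for (size_t x = x0; x < x0 + w; x++) {
            double dev = (double)depth[y * frame_width + x] - s.mean;
            if (dev < 0.0)
                dev = -dev;
            if (dev > s.max_deviation)
                s.max_deviation = dev;
        }
    }
    return s;
}
```

Calling `roi_summary` on the box region of each saved frame would give one mean per tape mark, plus a quick check that the surface really is flat in raw units.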

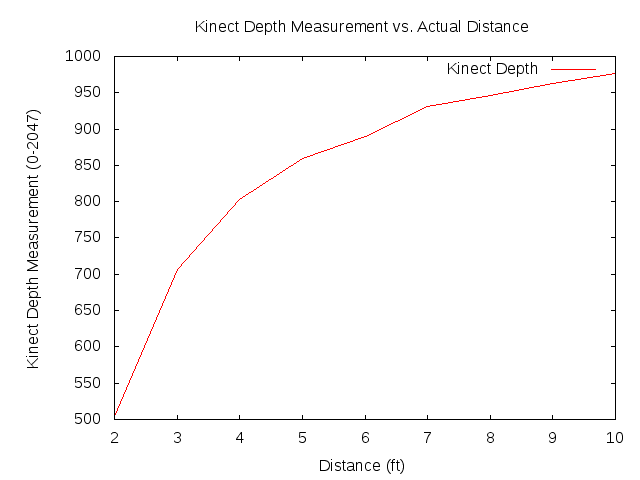

My findings tell me that the Kinect’s depth measurement as a function of distance is not linear… It appears to follow a logarithmic scaling. This means that the further you get from the sensor the less detailed depth information you’ll get about objects.

No interpolation or further calculation was applied to the graph above; it is simply a line graph. The actual results are shown below.

| Distance from sensor | Mean raw depth value |
| --- | --- |
| 2 feet | 504 |
| 3 feet | 706 |
| 4 feet | 803 |
| 5 feet | 859 |
| 6 feet | 890 |
| 7 feet | 931 |
| 8 feet | 946 |
| 9 feet | 963 |
| 10 feet | 976 |

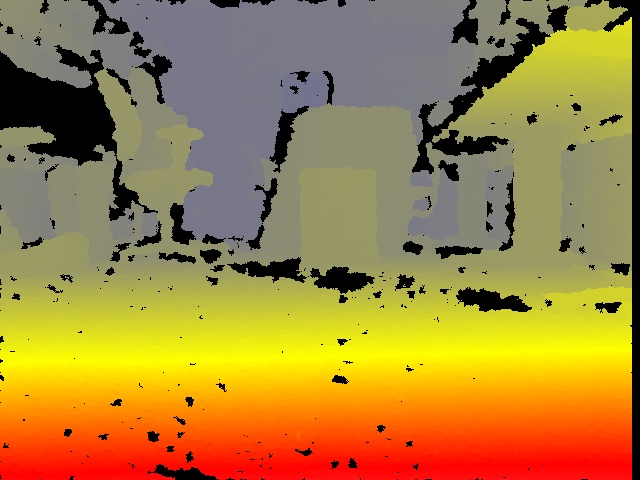

Precision drops significantly with distance. The depth image shows the color gradient changing more and more slowly the further away things get. Below, you can see the color-mapped depth image and the RGB image as seen by the Kinect at the 10 ft mark. The box has roughly the same color as the majority of the objects at the same depth or further. This makes it difficult to discern meaningful information from objects at a depth of 10 feet or more.
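
To put a number on that loss of precision, one can count how many raw units each one-foot interval of the table spans. The sketch below does only that; the array holds the measured means from the table, while the names `raw_at_foot` and `raw_units_in_foot` are made up for this example.

```c
/* Mean raw values from the table above, for the 2 ft through 10 ft marks. */
static const int raw_at_foot[] = {504, 706, 803, 859, 890, 931, 946, 963, 976};

enum {
    FIRST_FOOT = 2,
    NUM_MARKS = sizeof raw_at_foot / sizeof raw_at_foot[0]
};

/* Raw units spanned by the one-foot interval that starts at `foot`
 * (valid for foot = 2..9). Returns -1 outside the measured range. */
int raw_units_in_foot(int foot)
{
    int i = foot - FIRST_FOOT;
    if (i < 0 || i + 1 >= NUM_MARKS)
        return -1;
    return raw_at_foot[i + 1] - raw_at_foot[i];
}
```

With these measurements the interval from 2 ft to 3 ft covers 202 raw units, while the interval from 9 ft to 10 ft covers only 13. Spread over one foot (30.48 cm), that is roughly 1.5 mm per raw unit near the sensor versus roughly 2.3 cm per raw unit at the far end.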

I shared these results with my professor Dr. Xiuwen Liu at Florida State University. Below are the slides he made to share this information with his Computer Vision course CAP5415.

Conclusion

For my research I plan to register point clouds from multiple Kinects. If I take the findings above as true, it means that I will need to consider the relative depth of objects from each sensor when using many Kinects to reduce noise in the measurements.

*UPDATE* Playing with the Kinect has always been a hobby. My research has taken me into a very different field, and I am not savvy with the latest and greatest Kinect ideas/libraries/tools. I would not consider myself an authoritative figure on Kinect matters.

Thanks for this, this is useful for pre-normalizing the depth image spat out of the kinect.

Thanks for the post! I was actually thinking about doing something similar soon. You didn’t mention which driver you are using. Is it freenect or OpenNI? It doesn’t look like it from the images, but have you done the image registration+rectification before getting measurements?

Ah yes, for this test I used the libfreenect driver. That is a good point, however; I think testing the OpenNI driver would provide some interesting results to compare against. I have OpenNI installed, so I’ll do it soon! I did no registration/calibration or any other calculations before acquiring those measurements. They’re raw from the depth buffer.

“marf” has a formula for converting the raw depth data to cm.

http://vvvv.org/forum/the-kinect-thread

Since the Kinect paints infrared dots and then reads them, multiple Kinects seem to get confused by overlapping dots, so the more Kinects you use, the more noise gets introduced.

Hi Nathan,

I actually did the same experiment as you, but I don’t get a graph exactly like yours. What driver are you using?

Oops, sorry, I didn’t read all of the comments. OK, you are using the libfreenect driver, right? Can you share your code with me? I actually use the OpenNI driver. Maybe that will be the big difference.

Hey Kufaisal!

I’ve played with the OpenNI driver a bit as well. I personally have chosen libfreenect for its simplicity, and the fact that it’s open source! I’d be happy to share my code with you; when I get back into the lab tomorrow I’ll post a link to it here at the bottom of the post. Thanks for reading!

I’m curious, even if your graph didn’t look similar, did your results suggest similar behavior?? Around what depth did your measurements begin to lose accuracy?

Cheers!

Hi, thanks for the post. I am working with the Kinect camera and I have a question regarding the depth value (the 11-bit one): how do you get it? Is there a method to get it? I am using the freenect library and there isn’t any property of the kinect or depthcamera class referring to the depth value of a given pixel.

hope someone help me clarify this!

Hey David!

The c_sync wrapper for the libfreenect driver provides a function…

```c
int freenect_sync_get_depth(void **depth, uint32_t *timestamp, int index, freenect_depth_format fmt);
```

This allows you to access it directly. It will store the depth into the void** depth pointer. You can use it like this:

```c
#include "libfreenect_sync.h"

short *depth = 0;   /* set by the wrapper to point at the depth frame */
char *rgb = 0;      /* set by the wrapper to point at the RGB frame */
uint32_t ts;        /* timestamp of the returned frame */

freenect_sync_get_depth((void**)&depth, &ts, 0, FREENECT_DEPTH_11BIT);
freenect_sync_get_video((void**)&rgb, &ts, 0, FREENECT_VIDEO_RGB);
```

It returns a negative if it fails to access the data from the Kinect. I hope that helps, good luck!
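
Once the call succeeds, reading the value of one pixel is a matter of indexing the buffer. The helper below is a sketch under stated assumptions: that the `FREENECT_DEPTH_11BIT` frame is 640x480, row-major, with one 16-bit word per pixel and the reading in the low 11 bits. The name `raw_depth_at` is made up for this example and is not part of libfreenect.

```c
#include <stddef.h>
#include <stdint.h>

/* Assumed frame geometry for FREENECT_DEPTH_11BIT (see lead-in). */
enum { DEPTH_WIDTH = 640, DEPTH_HEIGHT = 480 };

/* Returns the raw 11-bit value at pixel (x, y), or -1 if the buffer is
 * missing or the coordinates fall outside the frame. */
int raw_depth_at(const uint16_t *depth, int x, int y)
{
    if (depth == NULL || x < 0 || x >= DEPTH_WIDTH ||
        y < 0 || y >= DEPTH_HEIGHT)
        return -1;
    /* Row-major layout: skip y full rows, then x pixels; keep 11 bits. */
    return depth[(size_t)y * DEPTH_WIDTH + (size_t)x] & 0x7FF;
}
```

With the buffer filled by `freenect_sync_get_depth`, something like `raw_depth_at((const uint16_t *)depth, 320, 240)` would return the raw value at the center of the frame.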

Very good article. I’m very interested in seeing any real-world data from real-time systems. I wonder if doing stereo vision with these could reduce the total error. I know there would be interference from the other Kinect, but I wonder whether a shared timer and a strobe between the two would remove the interference.

Anthony, thank you! I’m certain using multiple cameras would reduce the total error. I wrote an honors thesis on registering multiple Kinects; the interference is inconsequential when dealing with only two sensors. Here is a short demonstration.

Hi Nathan,

Great experiment you did there! Can you share some knowledge on collecting 3D information from Kinect using libfreenect?

I encountered several examples on collecting the depth map using freenect_sync_get_depth, such as cvDemo.c in OpenKinect package:

```c
freenect_sync_get_depth((void**)(&depthData), &timestamp, 0, FREENECT_DEPTH_11BIT);
…
int lb = ((short*)depthImage->imageData)[i] % 256;
int ub = ((short*)depthImage->imageData)[i] / 256;
…
switch (ub)
{
    case 0:
        depth_mid[3*i+2] = 255;
        depth_mid[3*i+1] = 255-lb;
        depth_mid[3*i+0] = 255-lb;
        break;
    case 1:
        depth_mid[3*i+2] = 255;
        depth_mid[3*i+1] = lb;
        depth_mid[3*i+0] = 0;
        break;
    …..
```

What do lb and ub represent? What information is stored in depth_mid and the logic behind the above formula for depth_mid?

One big question is: how to calculate real world x,y,z from depthData above?

Upon researching information, I saw some sources advising that we should sync/map the depth map and rgb map (from freenect_sync_get_video), that the depth map cannot be left as-is as it is 11bits (should change it to 14 (or 16) bits). Do you have any idea about these?

Do you need to calibrate the Kinect? Are you following this guide?

http://nicolas.burrus.name/index.php/Research/KinectCalibration

I am quite confused about how to use the Kinect with Windows (Linux guys have more packages). Various sources show different instructions (there are many drivers as well), yet all stop at the step where they show how to install the drivers and run the example; there is no guide on algorithms and development for Windows.

Thanks in advance!

Ken

Ken,

I can understand how you may be confused. There is a lot of information out there and it’s difficult to find the information that is right for you.

As for the example you provided, it looks like a portion of the color mapping code for the depth image. What does this mean? Recall the depth array is an array of 11-bit elements, giving a range of 0-2047. If you try to visualize this depth directly, you’ll get a black and white image. What the code above does is chunk the 2048 values into 2048/256 = 8 regions. The different cases (0-7) are the different regions; within each region the color is faded using “lb” or “255-lb” on the 3 color channels Red, Green, Blue, and the result is stored in the depth image, e.g. “depth_mid[3*i+0] = 255-lb;” (+0, +1, and +2 are the different color channels). This way you can simulate depth as a collection of color gradients!
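
As a rough sketch of that banding idea, the function below splits a value into its band index and its position inside the band, then colors it using only the two cases visible in the quoted excerpt. The function name `band_color` is made up for this example, and painting every other band black is an assumption made because the excerpt elides the remaining cases; it is not what the demo does.

```c
#include <stdint.h>

/* Colors one value following the two cases quoted above.
 * out[0], out[1], out[2] mirror the depth_mid[3*i+0..2] layout. */
void band_color(int value, uint8_t out[3])
{
    if (value < 0)
        value = 0;             /* guard: raw values are never negative */

    int lb = value % 256;      /* position inside the band, 0..255 */
    int ub = value / 256;      /* band index, 0..7 for 11-bit input */

    switch (ub) {
    case 0:                    /* fade two channels down from full */
        out[2] = 255;
        out[1] = (uint8_t)(255 - lb);
        out[0] = (uint8_t)(255 - lb);
        break;
    case 1:                    /* ramp one channel back up */
        out[2] = 255;
        out[1] = (uint8_t)lb;
        out[0] = 0;
        break;
    default:                   /* bands not shown in the excerpt */
        out[0] = out[1] = out[2] = 0;
        break;
    }
}
```

Note that values 255 and 256 both come out as (0, 0, 255) in `out[0..2]` order, so the two quoted bands join without a jump in color.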

Typically you’ll have front, mid, and back images. This is a way of buffering your frames and keeping track of where they are in the capture->format->render process. Freshly captured images from your sensors are usually the back image, then once you begin formatting it (computer vision analysis, or color augmentation, anything really) then it is saved in the mid image, and a new image is captured and stored in back. Finally the mid image is pushed to the front to be displayed, the back image is pushed to the mid for processing, and a new back image is captured from your sensor. This is just one of many ways to buffer your frames. This is done for various reasons – processing time, hardware limitations, prevent lag/frames not finishing rendering. Anyway, this summer I hope to write a new comprehensive computer vision tutorial using the Kinect and discussing frequently used procedures, jargon, and other fundamentals to help people who are trying to get started in computer vision.
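
A minimal sketch of that rotation, assuming the three images are kept as pointers so that only the roles change and no pixel data is copied. The `frame_buffers` struct and `rotate_buffers` function are illustrative names, and reusing the old front image as the next back image is a design choice made for this example.

```c
#include <stdint.h>

/* Three frame slots identified by role. */
typedef struct {
    uint8_t *back;   /* being filled by the sensor */
    uint8_t *mid;    /* being processed */
    uint8_t *front;  /* being displayed */
} frame_buffers;

/* Advance the pipeline by one step: mid -> front, back -> mid, and the
 * slot that was on display becomes the back buffer for the next capture. */
void rotate_buffers(frame_buffers *fb)
{
    uint8_t *old_front = fb->front;
    fb->front = fb->mid;
    fb->mid = fb->back;
    fb->back = old_front;
}
```

After three calls every slot is back in its original role, so three allocations are enough for the whole lifetime of the program.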

Converting the Kinect image to real world coordinates is a process known as Stereopsis. Stereopsis is how the human eyes perceive depth. It is probably one of the most fundamental and important principles in computer vision, and you’d benefit tremendously by putting in the time to sit down and figure it out. Understanding the derivation and motivation behind the method is not easy, and involves lots of linear algebra. Again, however, it is essential to gaining a complete grasp of 3D scene reconstruction. Here is a good place to start reading about it. If you understand this thoroughly, then you can move on to a more rigorous derivation.

Thank you for reading my post and giving me feedback, I hope this was helpful to you. Let me know if you have any other questions, or just keep me updated on some of the fun stuff you’re doing!

Cheers

Is it possible to actually get a raw distance feedback from the kinect. I’m running on linux.

Yes, using any kinect driver it is possible to acquire the raw distance. Depending on the driver though, you may only be getting the disparity which requires a calculation to get the actual physical distance. The libfreenect driver is one of those drivers.

I’m actually quite new to this thing. And I had NITE installed as well. But I’m not sure which modules I should run in order to get distance values.

Hi nathan, nice post… I am working on the Kinect as well and i am using the libfreenect like you. I would like to know how to get the distance values. Can u explain more in detail? i am just like cheyanne… really need your help… any help provided will be appreciated… please

Hi, it would nice of you to post the code for the distance calculation.

Hi,

really sorry to disturb you… I have tried your function, but glview.c, the one given as an example in libfreenect, calls glutMainLoop(), which never returns once called.

How did you go about getting the data? I can’t get the data because of the glutMainLoop() function…

Hi Nathan,

Very much appreciate you taking time to reply me!

I did read a little about stereo vision from [Zhang], Learning OpenCV, and Multiple View Geometry [Zisserman]. However, aren’t stereo vision and epipolar geometry meant for stereo cameras, which are passive sensors, where we need stereo correspondence to get disparity? Please correct me if I am wrong. In the Kinect’s case we have an active sensor, so I think we don’t use stereo correspondence or epipolar geometry, do we?

I wonder what the raw depth value (0-2047) means for Kinect. Is it the disparity or something else? How to convert it into x,y,z value? Btw did you follow the calibration using Nicolas’s page I mentioned above, or use the default yml file from ROS that he provides? Are you developing in Linux or Windows? Have you tried mrpt? Which one would you recommend, mrpt or ros(opensource implementation) , libfreenect or OpenNI (driver)?

Thanks!

Ken.

Cheyanne,

Sorry for the tardy replies. I just started a new internship and have been dedicating a lot of my time to it!

I’ll be honest with you, I’m not very familiar with the OpenNI driver. I played around with it a few times, but never found a need to wade through the bad documentation when the libfreenect driver was available. Best of luck!

Lakshmen,

No problem! Again, sorry for the tardy reply. Accessing the depth matrix and visualizing it are very different things. If all you’re looking for is to access the depth frames to perform some sort of analysis, then steer clear of OpenGL and ‘glutMainLoop()’. What I would suggest is that you compile the OpenCV wrapper for libfreenect. OpenCV is hands down the best computer vision library available. So if you can get the Kinect depth and intensity images in an OpenCV Mat, then things will be set up nicely for you. If you have the OpenCV wrapper installed, it’s a simple call to

```cpp
Mat rgb_frame = freenect_sync_get_rgb_cv(cameraIndx);
Mat depth_frame = freenect_sync_get_depth_cv(cameraIndx);
```

to access the Kinect data. (cameraIndx is for multiple Kinects)

If you want to see the OpenCV wrapper in action, you can take a look at my registration program. I use it to get data from 2 Kinects in the ‘loadBuffers’ function and register them into one coordinate system.

Give the OpenCV wrapper a shot and take a look at the OpenCV sample code in libfreenect, then let me know how it works out for you!

Ken,

Excellent! I’m glad to hear you’re researching more about stereo vision on your own. Those are good sources; I have Zisserman’s book! As to your first point, you’re on the right track. The Kinect does most of the work for us when it gets the disparity values (0-2047). However, finding the spatial z coordinates takes a little more work. Take a look at this slide show that a professor here at FSU put together. The d value on the 3rd slide is the disparity; finding the actual depth requires some camera parameters, as the formula shows. This is more or less what can be done with the essential matrix obtained from the 8 Point algorithm.

The data that Nicolas used for calibration is the same as the results from this experiment, so our calibrations are the same. I develop in Linux, Ubuntu 10.xx and 11.04. I have not used mrpt, I have found that the ROS has been more than adequate for all my needs. Up until very recently I would have suggested you use libfreenect, however after the Point Cloud Library won my heart over in the lab today, I have to confidently suggest you use that for all of your point cloud/kinect needs!

I sure am glad I stumbled across your website – your comments are very insightful, especially the rationale for using three buffers (I’m a programmer with no video or computer vision background).

I would like to ask if you understood and/or could explain the use of their gamma array in glview.c:

```c
for (i=0; i<2048; i++) {
    float v = i/2048.0;
    v = powf(v, 3)* 6;
    t_gamma[i] = v*6*256;
}
```

I understand the general idea is they want to have some color representation for depth, but it looks like the formula is: gamma[i] = (i / 2048)^3 * 6 * 6 * 256

How did they come up with that?

Then elsewhere, as someone posted earlier, they use the gamma value based on the depth:

```c
int pval = t_gamma[depth[i]];
int lb = pval & 0xff;
…
```

but they only handle the low byte; doesn't this mean they are reducing the depth accuracy by at most 3 bits (11bit depth image?) ? i.e. couldn't this result in same colors for objects at vastly different depths?

I believe many other beginners in this area would find a comment/post on this code most insightful. I know I would…
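
For readers who want to poke at the numbers, here is a small sketch that rebuilds the table from the quoted loop. It is meant only to show what the formula produces, not why the glview.c authors chose it. The names `build_gamma` and `RAW_LEVELS` are made up for this example, and `v * v * v` stands in for `powf(v, 3)` so the snippet needs no math library.

```c
#include <stdint.h>

enum { RAW_LEVELS = 2048 };

/* Fills table[i] with 6 * 6 * 256 * (i / 2048)^3, truncated to an
 * integer, which is what the quoted loop computes. */
void build_gamma(uint16_t table[RAW_LEVELS])
{
    for (int i = 0; i < RAW_LEVELS; i++) {
        float v = i / 2048.0f;
        v = v * v * v * 6;                   /* cubic curve, first factor of 6 */
        table[i] = (uint16_t)(v * 6 * 256);  /* second factor of 6, then 256 */
    }
}
```

Since 6 * 6 * 256 = 9216, the entries run from 0 up to 9202 at index 2047, so each entry needs more than one byte. The cubic curve also hands out very few distinct entries to small raw values and many to large ones. In the cvDemo.c excerpt quoted earlier in this thread, the high byte selects the case and the low byte sets the shade; whether glview.c does the same is not visible in the excerpt above, which elides that part.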

How can you make sure that the IR camera plane is exactly aligned with the line you placed the Kinect on?

Personally, I think the output differs from Kinect to Kinect and with any misalignment.

Cheers.

Hi Nathan,

Thank you for sharing this information. I have done a similar experiment, and I find it never returns a depth value less than 500. Is there a way to get depth values within 500cm?

Thanks!

Wentao Liu

Nathan,

A splendid experiment indeed. I too use libfreenect libraries to access raw depth data from Kinect. I am trying to track human movements from the kinect depth images. However, as a calibration I need the raw depth data to be converted to millimetres. I came across a post which says,

```c
for (size_t i=0; i<2048; i++)
{
    const float k1 = 1.1863;
    const float k2 = 2842.5;
    const float k3 = 0.1236;
    const float depth = k3 * tanf(i/k2 + k1);
    t_gamma[i] = depth;
}
```

Does the array in t_gamma[] give the actual depth in metres? I am not sure what calibration is being done. If this is not so, how do I convert the raw depth data from Kinect to millimetres. Kindly help!!

Cheers,

Sundar

Is there a Kinect sensor in mobile size? If not, do you know if they’re working on one?

“Mobile size” is a relative term. Technically different teams of people have already extracted the core hardware from the Kinect and mounted it on robots/quadrocopters essentially making it quite mobile.

really good experiment!

Would like to ask one thing; in your posts and comments you mentioned about Point Cloud Library, I am using this Library. In this they are using OpenNI driver.

My question is: when I save data from the Kinect in ‘.pcd’ file format, it gives me information about X, Y, Z and rgb. Are X, Y and Z (depth) saved in real world coordinates, with ‘meters’ as the unit?

Is it right, what I understood?

Cheers!!!

This post confused me when I first started getting into the Kinect a few months ago. When I did some initial online research, I found this page and got horribly confused — it refers to the 11-bit values coming from the Kinect as “depths.”

I’ve now had considerably more experience with the Kinect and I just ran across this post again. For future readers, it might be a good idea to clarify that the 11-bit values coming from the Kinect are actually “shifted disparity” values and NOT “depth.” (As mentioned in the slide presentation at the end of the post.)

It also seems to me that while the idea of the post is correct (accuracy DOES fall off with distance), this is really just a direct consequence of the physics of the disparity equation, and of course the imprecision of the quantized values computers use: As the disparity gets smaller (or, equivalently, as the “shifted disparity” gets larger), the difference between the disparity values yields larger and larger differences in depth values.

Hi Nathan!

I would like to know where you got the formula used to calculate from raw data to real depth. And what is the value 1091.5?

Best regards

Hey Javi,

The raw data from the Kinect is the disparity. The actual depth, z, given the kinect disparity, d, is…

z = 34800/(1091.5-d)

and that is in cm.
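
A minimal sketch of that conversion, plus the size of one raw step at a given value. The function names `raw_to_cm` and `cm_per_raw_step` are made up for this example, and returning -1 for values at or beyond 1091.5 is a choice made here, since the formula gives no positive finite depth there.

```c
/* Depth in centimetres for a raw Kinect value d, using the formula above:
 * z = 34800 / (1091.5 - d). Returns -1.0 when d >= 1091.5. */
double raw_to_cm(double d)
{
    double denom = 1091.5 - d;
    if (denom <= 0.0)
        return -1.0;
    return 34800.0 / denom;
}

/* Change in depth (cm) when the raw value goes from d to d + 1,
 * or -1.0 if either value is outside the formula's range. */
double cm_per_raw_step(int d)
{
    double z0 = raw_to_cm(d);
    double z1 = raw_to_cm(d + 1);
    if (z0 < 0.0 || z1 < 0.0)
        return -1.0;
    return z1 - z0;
}
```

Applied to the table in the post, the raw value 504 measured at the 2 ft mark (60.96 cm) comes out at about 59.2 cm, and 976 measured at the 10 ft mark (304.8 cm) comes out at about 301.3 cm. One raw step is worth about 0.1 cm at 504 but about 2.6 cm at 976, which matches the loss of precision the post describes.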

Hi Nathan! Thanks for the reply.

What I would like to know is where the number 1091.5 comes from.

I know that the number 34800 comes from: 7.5 * 580 * 8, where 7.5 is the baseline, 580 is the focal length of the IR camera, and 8 is due to subpixel accuracy.

But I don’t know where the number “1091.5” comes from.

Thank you very much.

Hi Nathan! For me it is very important to justify the equation, which is why I want to know where that number comes from. It seems that the equation is based on the model of stereopsis: z = (b * f) / d

Cheers!
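
One way to line the two formulas up, offered as a sketch rather than a confirmed derivation: assume the raw value is an offset disparity counted in units of 1/8 pixel. The symbols d_raw (the raw 11-bit value) and d_off (the offset) are introduced here for illustration; b = 7.5 cm and f = 580 pixels are the values quoted above.

```latex
\begin{align*}
d &= \tfrac{1}{8}\,(d_{\mathrm{off}} - d_{\mathrm{raw}})
   && \text{(assumed: pixel disparity from the raw value)} \\
z &= \frac{b\,f}{d}
   = \frac{8\,b\,f}{d_{\mathrm{off}} - d_{\mathrm{raw}}}
   = \frac{8 \times 7.5 \times 580}{1091.5 - d_{\mathrm{raw}}}
   = \frac{34800}{1091.5 - d_{\mathrm{raw}}}\ \text{cm}
\end{align*}
```

Under that reading, 1091.5 plays the role of d_off: it is the raw value at which the disparity would be zero, so z grows without bound as d_raw approaches it. How that constant was obtained is not stated in this thread.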

I haven’t read most of the comments. What you’ve rediscovered in your post is that triangulation accuracy falls off with distance. This fall-off is logarithmic, or actually tangential. There is a formula correction for this. See, for example, http://vvvv.org/forum/the-kinect-thread#comment-56141

Or, see Stéphane Magnenat at http://openkinect.org/wiki/Imaging_Information:

distance = 0.1236 * tan(rawDisparity / 2842.5 + 1.1863) in meters, adding a final offset term of -0.037.

Excellent, I actually used Stephane’s calibrations before I did my experiment and made my own. Thanks for the additional information Gary, and mostly thanks for writing Learning OpenCV! It was my computer vision bible when I was first getting started with this stuff. Cheers!

Hi Nathan,

Excellent job! How do you find the ROI in the matrix? Thanks.

Honestly, I just looked at a matrix of the raw data and visually inspected it to find the cluster. It would have been more “scientific” if I were to have applied some k-means clustering or something comparable first.

How do you measure objects or distances with the Kinect? I’m very new at programming with the Kinect, and I was wondering if I could get your code for measuring distances.

Do you have any idea where I can get example code for measuring distance with the Kinect?

Hi Nathan,

I am lost in a very easy point I guess.

I am trying to get a simple depth measurement from the depth picture.

Here is where I don’t understand:

Let’s say I define the depth picture by a grid. An object is at 100,100 pixel.

It gives me raw data for that pixel of: A = 100, R = 125, G = 125 and B = 125. These are theoretical numbers. Those are the only info I have been able to get from the Depth_camera_source.

You say the sensor has 11-bit resolution, which gives 0 to 2047. Now how do I take those bytes in order to get this 11-bit information?

This was very interesting:

The raw data from the Kinect is the disparity. The actual depth, z, given the kinect disparity, d, is…

z = 34800/(1091.5-d)

But how do the raw R, G, B bytes convert to give d?

Regards,

Dany B.

Sorry Digi, but I don’t have that code anymore. Depending on the library you use, it is really just a few lines. Take a look at some of the sample code for the library you choose to access it.

Hey Dany. You do not get d from the RGB image. Those are two completely different spaces. The depth matrix has only 1 channel, with each entry being a number from 0-2047. That is the disparity, which you use to calculate the actual depth from the camera. I would suggest you simply use the Point Cloud Library; it covers up all this low-level mumbo jumbo and lets you get straight to the point cloud analysis fun. Happy hacking!

Hey Nathan.

I am really new to Kinect, so pardon my ignorance on the matter. Are the calibrations that you and Gary mentioned similar to stereo calibration? I am working with OpenNI (I built my OpenCV with OpenNI support) but would like to change over to libfreenect. Can you point me to a few tutorials on how to get OpenCV working with libfreenect (installation and programming)?

Thanks.

Hi,

I’m using the Kinect SDK and I need to see a visualization of the “raw kinect data”, the same as these guys have gotten here: http://www.youtube.com/watch?v=nYsqNnDA1l4&feature=related

I know my question is not so related to your article, but as you are familiar with Kinect I would appreciate your help with this. Do you know how I can get this data from the Kinect SDK or any other software that you know?

Thanks in advance

Hi,

You have mentioned 2048 depth levels but there are only 1024 depth levels with one bit fixed for identifying a point as valid or invalid.