The depth array that comes in from the Kinect has a precision of up to 11 bits, or 2^11 = 2048 different values. The Kinect is just a sensor, or measurement tool, and every scientist wants to know the limitations of their equipment. So the obvious questions are: what is the accuracy of these measurements, and what do these values represent in physical space?

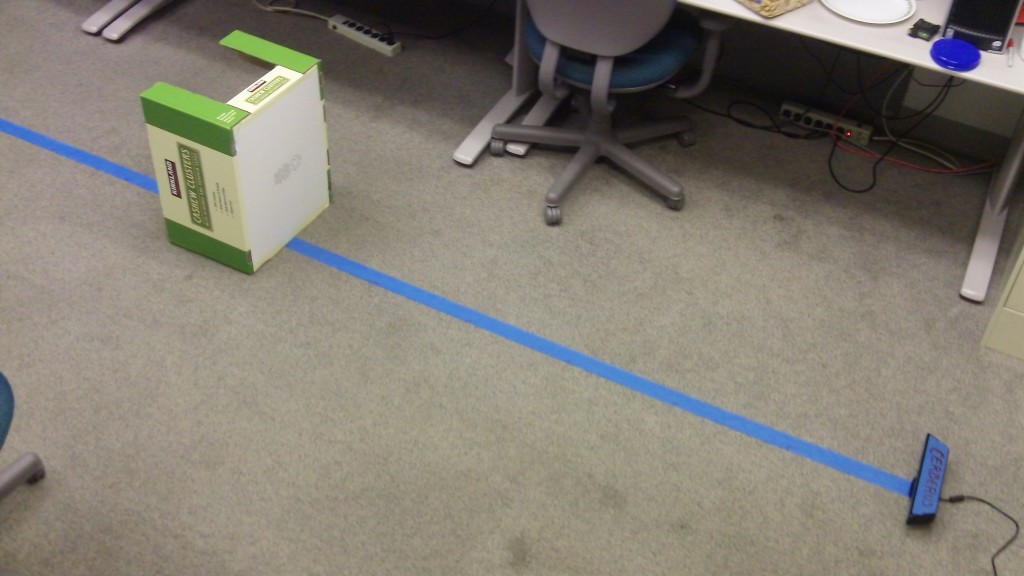

I conducted a very simple experiment to answer these questions. I placed the Kinect on the floor and laid a long piece of tape on the floor, perpendicular to the Kinect’s image plane so that it ran straight out from the sensor. I then marked the tape at 1 ft intervals from 1 ft to 10 ft from the sensor. I placed a cardboard box in the center of the Kinect’s field of view at each mark, from 2 ft all the way back to 10 ft (1 ft was within the minimum triangulation distance for PrimeSense’s PS1080-A2 SoC image processor). The experiment looked like this.

For each measurement I saved the RGB image, a color-mapped depth image, and a text file containing the raw values from the depth array for the region of interest (ROI) containing the box. I used the color-mapped depth image to cross-check my measurements and give me a little more confidence in their accuracy. The Kinect’s depth measurements within the ROI were all very similar: the maximum deviation from the mean was 2 raw units for all values along the planar surface of the box. I averaged those values to come up with my measurement for each distance.
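The averaging step can be sketched as follows. This is a minimal illustration, not the code used in the experiment: the function name `summarize_roi` and the sample readings are hypothetical, chosen only to show how the mean and the maximum deviation from it might be computed from the raw ROI values.

```python
from statistics import mean

def summarize_roi(roi_values):
    """Return (mean, max absolute deviation from the mean) of raw depth readings."""
    avg = mean(roi_values)
    max_dev = max(abs(v - avg) for v in roi_values)
    return avg, max_dev

# Hypothetical raw readings from the box's planar surface (NOT the measured data).
sample_roi = [503, 504, 504, 505, 504, 503, 505, 504]

avg, max_dev = summarize_roi(sample_roi)
print(f"mean raw depth: {avg:.1f}, max deviation from mean: {max_dev:.1f}")
```

A small maximum deviation suggests the ROI really does cover only the flat face of the box; a large one would hint that background pixels leaked into the region.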

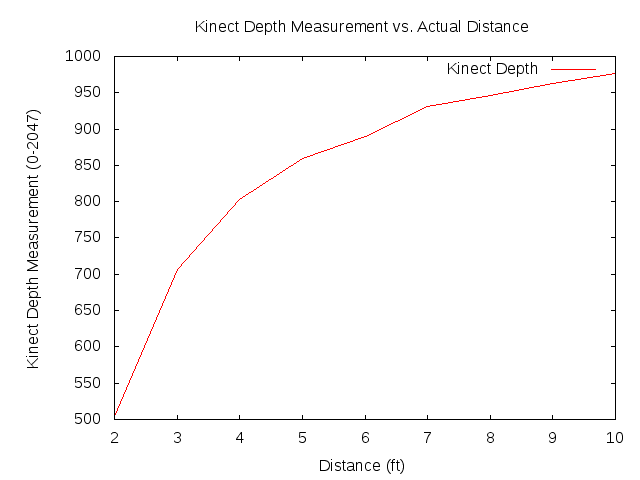

My findings tell me that the Kinect’s raw depth value is not a linear function of distance. It appears to follow a logarithmic scaling. This means that the farther you get from the sensor, the less detailed the depth information you get about objects.
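The "logarithmic" description is a visual impression of the plot. One way to check it is a least-squares comparison against an alternative curve. The sketch below fits the averaged readings reported in this post to two candidate models, raw = a + b·ln(d) and raw = a + b/d. The inverse-distance model is an assumption brought in for comparison (it is the shape a triangulation-based sensor might be expected to produce), and the helper names are hypothetical.

```python
import math

# Averaged raw readings reported in this post: distance in feet -> raw depth value.
MEASURED = {2: 504, 3: 706, 4: 803, 5: 859, 6: 890, 7: 931, 8: 946, 9: 963, 10: 976}

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b

def rmse_for(transform):
    """Fit raw = a + b*transform(distance) and return the RMS residual."""
    xs = [transform(d) for d in MEASURED]
    ys = list(MEASURED.values())
    a, b = fit_line(xs, ys)
    return math.sqrt(sum((a + b * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs))

log_rmse = rmse_for(math.log)               # candidate: raw = a + b*ln(d)
inverse_rmse = rmse_for(lambda d: 1.0 / d)  # candidate: raw = a + b/d (assumed alternative)

print(f"log model RMSE:     {log_rmse:.1f} raw units")
print(f"inverse model RMSE: {inverse_rmse:.1f} raw units")
```

On the nine readings in this post the inverse-distance fit leaves the smaller residual. With so few points this is only suggestive, and either way the practical conclusion is the same: depth resolution falls off with distance.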

No interpolation or further calculation was applied to the graph above; it is simply a line graph of the measurements. The actual results are shown below.

| Distance from sensor | Mean raw depth value |
| --- | --- |
| 2 feet | 504 |
| 3 feet | 706 |
| 4 feet | 803 |
| 5 feet | 859 |
| 6 feet | 890 |
| 7 feet | 931 |
| 8 feet | 946 |
| 9 feet | 963 |
| 10 feet | 976 |
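To turn a raw reading back into a physical distance, one simple option is to interpolate linearly between the rows of the table. The sketch below does that. It is only an approximation under the assumption that the curve is close to straight between adjacent rows, it is valid only inside the measured 2 to 10 ft range, and the function name `raw_to_feet` is hypothetical.

```python
from bisect import bisect_left

# (distance in feet, averaged raw depth value) pairs from the table above.
CALIBRATION = [(2, 504), (3, 706), (4, 803), (5, 859), (6, 890),
               (7, 931), (8, 946), (9, 963), (10, 976)]

def raw_to_feet(raw):
    """Estimate distance in feet by linear interpolation between table rows."""
    raws = [r for _, r in CALIBRATION]
    if not raws[0] <= raw <= raws[-1]:
        raise ValueError("raw value outside the measured 2-10 ft range")
    i = bisect_left(raws, raw)          # index of first table value >= raw
    if raws[i] == raw:
        return float(CALIBRATION[i][0])
    (d0, r0), (d1, r1) = CALIBRATION[i - 1], CALIBRATION[i]
    return d0 + (raw - r0) * (d1 - d0) / (r1 - r0)

print(raw_to_feet(605))  # a reading halfway between the 2 ft and 3 ft rows
```

Multiplying the result by 30.48 gives centimeters. A denser set of measurements would make the interpolation more trustworthy, particularly at close range where the raw value changes fastest.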

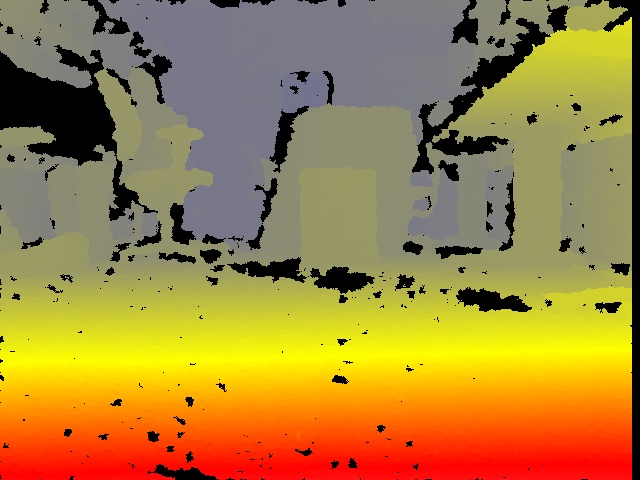

The precision is significantly reduced with distance. The color-mapped depth image shows the color gradient flattening out the farther away things get. Below, you can see the color-mapped depth image and the RGB image as seen by the Kinect at the 10 ft mark. The box has roughly the same color as the majority of the objects at the same depth or farther. This makes it difficult to discern meaningful information from objects at a depth of 10 feet or more.
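The loss of precision can be read straight off the measurements by looking at how much the raw value changes per foot. The sketch below computes those differences from the averaged readings in this post; the function name `raw_units_per_foot` is hypothetical.

```python
# (distance in feet, averaged raw depth value) pairs reported in this post.
MEASURED = [(2, 504), (3, 706), (4, 803), (5, 859), (6, 890),
            (7, 931), (8, 946), (9, 963), (10, 976)]

def raw_units_per_foot(table):
    """Change in raw depth value across each consecutive 1 ft interval."""
    return [r1 - r0 for (_, r0), (_, r1) in zip(table, table[1:])]

steps = raw_units_per_foot(MEASURED)
for (d0, _), step in zip(MEASURED, steps):
    # 12 inches per foot divided by raw units per foot = inches covered by one raw unit.
    print(f"{d0}-{d0 + 1} ft: {step:3d} raw units per foot, "
          f"about {12 / step:.2f} in per raw unit")
```

Between 2 and 3 ft the reading changes by 202 raw units, while between 9 and 10 ft it changes by only 13, so a single raw step covers roughly 15 times more distance at the far end of the range.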

I shared these results with my professor, Dr. Xiuwen Liu, at Florida State University. Below are the slides he made to share this information with his Computer Vision course, CAP5415.

Conclusion

For my research I plan to register point clouds from multiple Kinects. If I take the findings above as true, it means that I will need to consider the relative depth of objects from each sensor when using many Kinects to reduce noise in the measurements.

*UPDATE* Playing with the Kinect has always been a hobby. My research has taken me into a very different field, and I am not savvy with the latest and greatest Kinect ideas/libraries/tools. I would not consider myself an authoritative figure on Kinect matters.

Hi Raghav,

For kinect with libfreenect…follow the following post…It worked well

http://mitchtech.net/ubuntu-openkinect/

Thank you so much for your dedication. Your post helps my project and saves big time. Keep up the good work. May God bless you.

My pleasure. I’m glad someone appreciates it. Good luck!

hi,

I am using kinect to measure distance between head point and kinect sensor,

after i get these distances x,y and z, i use them to calculate pitch, roll and yaw

but how i can check these values are correct, because yaw, pitch and roll depends on x,y and z,

if x,y and z are correct then yaw, pitch and roll are correct

how can i check x,y and z values ?

Very interesting analysis! Nathan, is there any way to relate the depth information from Kinect to actual distance in cm?

Thanks for detailed work

Awesome! Just what I needed

thanks for ur guidance, my project is to locate the ball n then hit that ball, I need ur guidance to accomplish this task I will be thankful. GOD bless u

Hi Nathan,

(Urgent help required)

I want to ask a simple question. Kinect gives inverse images compared to other digital cameras.

Example, if an object is present in a scene at left position, kinect shows this object at right position in captured image.

Please tell me how to correct it or how to capture images and avoid it.

Thanks in advance

Hi Nathan,

Thank you so much for your post. it really helps me lot with my project.. i am wondering

how you got the distance values from the depth image?.. did you use any software or sdk.. i request you please help me with my question.. Thanks for your help.. my email id is navaneedonz@gmail.com..

It’s of great help for my project working on Kinect based robot hand. Thx

Hi Nathan,

Thank you very much for publishing this work. It was very helpful. I was working on getting accurate depth images using Kinect and I got a feeling that the readings were not really linear. I then did a quick google search and your work came up. It saved a lot of time. Also, I wanted to ask if you had a more dense data of ‘Actual Z vs. Measured Z’ (in an excel file or something). Otherwise, I would need to pick the visual data from the picture you posted and need to interpolate it.

Thanks

Raviteja Chivukula

help me with the measuring of distance for an object using kinect?

hi nathan

i want to know that how to calculate the distance by kinect

are u taking the maximum value of the depth image while plotting the graph ?? this is a bit confusing for me that how u are taking only single value ??